Published on

PostgreSQL Schema Health Metrics

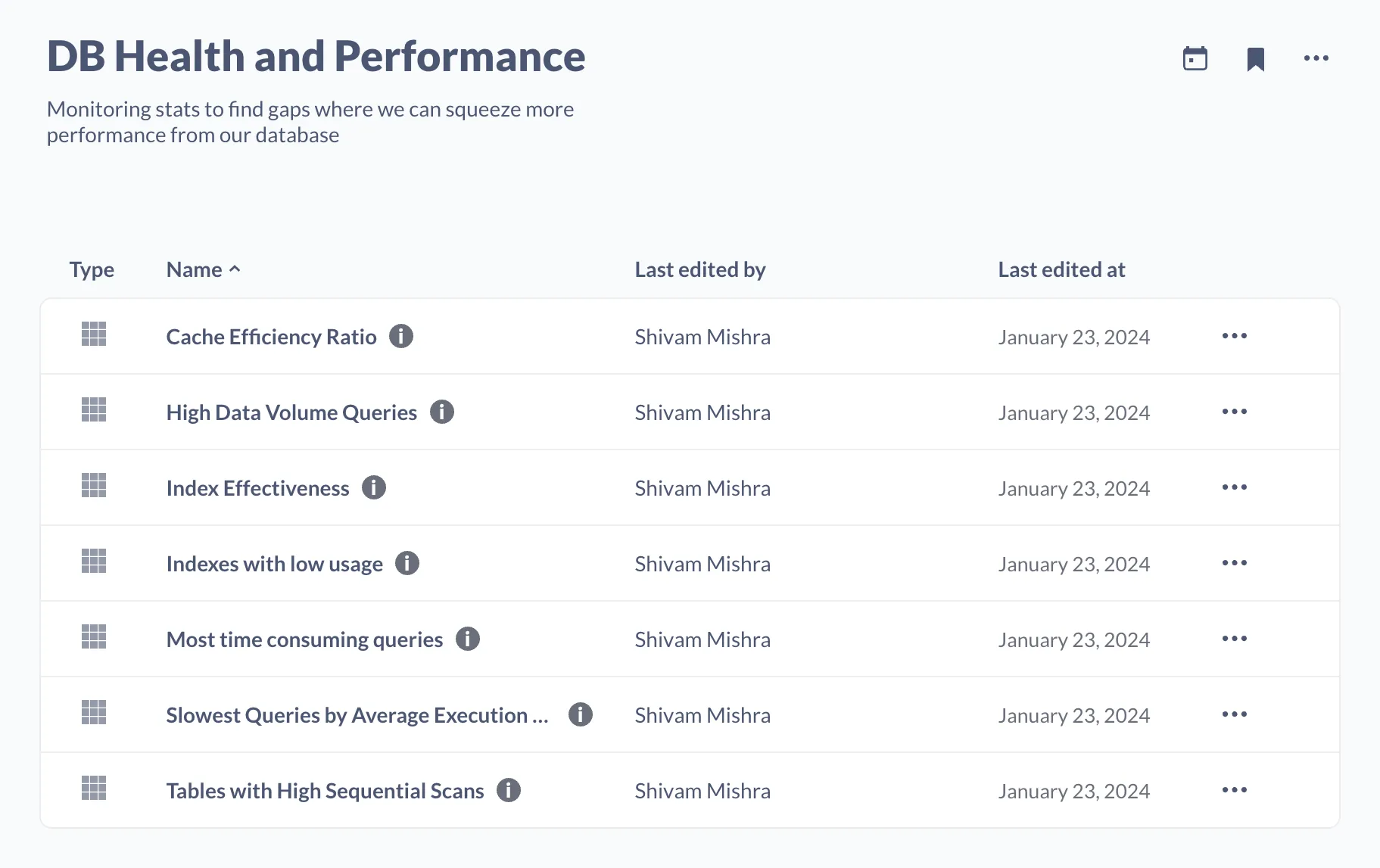

Often overlooked in database management, schema health metrics become pivotal when traditional scaling methods are no longer cost-effective. PostgreSQL, for instance, provides detailed insights through straightforward queries or plugins. Utilizing tools like Metabase for dashboarding, these metrics offer invaluable assistance in debugging.

This post mentions specific metrics that I monitor and how to interpret them. Although not particularly useful alone, they can significantly aid in optimizing query performance and shaving off that last ten milliseconds when used alongside debugging slow queries.

I monitor these metrics using Metabase as a dashboard; you can choose any platform that suits you. The queries are written for PostgreSQL, so you can employ them in any SQL client; however, some of these queries need you to have pg_stat_statements extension installed.

Stats to track

Cache Efficiency Ratio

SELECT relname, heap_blks_read, heap_blks_hit,

(heap_blks_hit / NULLIF(heap_blks_hit + heap_blks_read, 0)) AS hit_ratio

FROM pg_statio_user_tables

ORDER BY hit_ratio ASC, heap_blks_read DESC

LIMIT 10;

The hit_ratio represents the proportion of times data requested from a table was found in the cache (memory) instead of being read from the disk. Achieving a high hit_ratio in a heavily read table dramatically improves response times, especially if you’re building something like real-time data analytics applications.

Low cache hit ratios suggest performance issues due to frequent disk reads. This situation often arises when tables are too large for the available cache, or the workload involves diverse data access, leading to insufficient cache utilization. Optimizing this involves increasing cache memory, improving query patterns, enhancing indexing strategies, or data management techniques like partitioning.

heap_blks_read and heap_blks_hit provide raw numbers of block reads from disk (heap_blks_read) and block hits in cache (heap_blks_hit). Tables with high heap_blks_read and low heap_blks_hit are good candidates for optimization.

Index Effectiveness

SELECT relname,

indexrelname,

idx_scan,

idx_tup_read,

idx_tup_fetch,

CASE

WHEN idx_tup_read = 0 THEN 0

ELSE (idx_tup_fetch::FLOAT / idx_tup_read)

END AS effectiveness

FROM pg_stat_user_indexes

JOIN pg_indexes ON indexrelname = indexname

WHERE idx_scan > 0

ORDER BY relname, idx_scan ASC;

The index effectiveness query evaluates the efficiency of database indexes by comparing the number of rows fetched by index scans to the total rows read. A high ratio suggests the index effectively narrows down results, enhancing query performance. This assessment helps identify underperforming indexes for optimization.

Index with low usage

SELECT s.relname,

s.indexrelname,

pg_relation_size(i.indexrelid) / (1024 * 1024 * 1024)::FLOAT AS size_gb,

s.idx_scan

FROM pg_stat_user_indexes s

JOIN pg_index i ON i.indexrelid = s.indexrelid

WHERE s.idx_scan < 50

ORDER BY size_gb DESC;

This query identifies indexes with low usage (less than 50 index scans) and provides their sizes in gigabytes. These indexes might be candidates for removal to optimize database performance and storage efficiency.

If you’re maintaining large tables, eventually, you will need to perform index maintenance to ensure optimal performance. This includes removing unused indexes, which can be a significant overhead on write operations. This query helps identify such indexes.

Tables with high sequential scans

SELECT relname, seq_scan, idx_scan

FROM pg_stat_user_tables

WHERE seq_scan > idx_scan

ORDER BY seq_scan DESC

LIMIT 20;

This query is excellent for surfacing low-hanging fruits that you can fix immediately. In most cases, this is a sign that the table is improperly indexed or that the indexes are not utilized.

High Data Volume Queries

SELECT query, rows, calls, rows / calls AS rows_per_call

FROM pg_stat_statements

WHERE calls > 100

ORDER BY rows_per_call DESC

LIMIT 10;

This can identify frequently executed queries returning many rows per call. Helpful in pinpointing performance-impacting queries needing optimization like selectivity refinement or pagination. You won’t be able to optimize all these queries, but it’s a good starting point to identify the most expensive ones.

Most time-consuming queries

SELECT query, total_exec_time, calls, mean_exec_time

FROM pg_stat_statements

ORDER BY total_exec_time DESC

LIMIT 10;

This query aggregates performance data over time for all executions of each query including total execution time, mean execution time per call, number of calls, etc. This can help identify queries that are consuming the most time and are good candidates for optimization. However, it’s important to note that this query can be misleading if the number of calls is low, so it’s also essential to look at the number of calls.

Average slowest queries

SELECT query, calls, mean_exec_time

FROM pg_stat_statements

WHERE calls > 100

ORDER BY mean_exec_time DESC

LIMIT 10;

This is a slight variation of the “Most time-consuming” query, focusing on the average duration of individual executions of a query. This can help find queries that are typically slow each time they run; these are usually inherently inefficient or complex queries.

Often, this can surface queries that, when optimized, can significantly impact the application’s overall performance.

Gotchas

It’s important to know that interpreting these metrics in isolation may provide a partial picture; it’s essential to combine them with other performance analysis techniques, such as query profiling and database tuning, to achieve comprehensive performance optimization.

Another thing to know is that sometimes, it is too early to look at this. Engineering teams can make the most of any DB with very simple indexes. Eventually, as you grow, you will know when to start looking at these metrics and meaningfully use them.

If you notice, we have accessed data from the pg_statio_user_tables, pg_stat_user_indexes, and pg_stat_statements tables; while these hold a lot of insights waiting to be discovered, several factors can influence the accuracy and relevance of this data:

-

Resetting Statistics: PostgreSQL allows resetting statistics, which means you can clear the data from these views. If the statistics are reset frequently, the data might not accurately represent long-term trends or usage patterns.

-

Sampling: For performance reasons, some statistics, especially those from

pg_stat_statements, might be based on sampling rather than exhaustive logging of every event. This approach ensures minimal overhead on the database performance but can sometimes lead to less precise data. For instance,pg_stat_statementswill capture only 5000 statements by default, and you can configure it to capture more. [ref] -

Query Normalization: The

pg_stat_statementsextension normalizes queries to group similar ones together, which is beneficial for identifying patterns and hotspots. However, this means that the specific details of individual query executions, such as the exact values of parameters, are not preserved in the statistics.

References

You can read some of my favourite articles if you’re interested in more such content.

- TimescaleDB - Using pg_stat_statements to Optimize Queries

- Zerodha Engineering - Working with Postgres

📫 Get updates!

I'll send an email only when I publish something on my blog, which is usually once month at best, and you can unsubscribe instantly at any time.

No spam, unsubscribe at any time. Scout's honor.